Instruments for disaster preparedness evaluation: a scoping review

Measuring disaster preparedness has been a challenge as there is no consensus on a standardised approach to evaluation. This lack of clear definitions and performance metrics makes it difficult to determine whether past investments in preparedness have made sense or to see what is missing. This scoping review presents publications addressing the evaluation of disaster preparedness at the governmental level. A literature search was performed to identify relevant journal articles from 5 major scientific databases (Scopus, MEDLINE, PsycInfo, Business Source Premier and SocINDEX). Studies meeting the inclusion criteria were analysed. The review considered the multi-disciplinarity of disaster management and offers a broad overview of the concepts for preparedness evaluation offered in the literature. The results reveal a focus on the all-hazards approach and on the local authority level in preparedness evaluation. Variation in the types of instruments used to measure preparedness and the diversity of questions and topics covered in the publications suggest little consensus on what constitutes preparedness and how it should be measured. Many assessment instruments seem to lack use in the field, which limits feedback on them from experts and practitioners. In addition, tools that are easy to use and ready for use by practitioners seem scarce.

In March 2015, 187 United Nations member states ratified the Sendai Framework for Disaster Risk Reduction 2015-2030 (UNISDR 2015) that formulated future needs and priorities for disaster risk management around the world. Priority 4, ‘Enhancing disaster preparedness for effective response and to “Build Back Better” in recovery, rehabilitation and reconstruction’ states the importance of the ‘[…] further development and dissemination of instruments, such as standards, codes, operational guides and other guidance instruments, to support coordinated action in disaster preparedness […]’ (UNISDR 2015, p.22).

Although preparedness is considered to be of high priority and importance, there is no universal guide or definition on disaster preparedness (e.g. what it comprises or how to achieve preparedness) (McEntire & Myers 2004, McConnell & Drennan 2006, Staupe-Delgado & Kruke 2017). A commonly used definition by the United Nations explains preparedness as:

The knowledge and capacities developed by governments, response and recovery organizations, communities and individuals to effectively anticipate, respond to and recover from the impacts of likely, imminent or current disasters. (UNISDR 2016, p.21).

However, standards for disaster preparedness are scarce and this lack of guidance makes collecting data about and assessing preparedness difficult. The UNISDR definition also shows that preparedness has different units of analysis, from governments to individuals.

Despite attempts to develop preparedness measures, there remains a lack of consensus and, consequently, a research gap about how preparedness evaluation should be done (Savoia et al. 2017, Khan et al. 2019, Belfroid et al. 2020, Haeberer et al. 2020). Asch and co-authors (2005) concluded that existing tools lacked objectivity and scientific evidence, an issue that persists. Objective evaluation would allow for intersubjective comparability of preparedness. Savoia and co-authors (2017) analysed data on research in public health emergency preparedness in the USA between 2009 and 2015. Although research moved towards empirical studies during that period, some research gaps remained, such as the development of criteria and metrics to measure preparedness. Qari and co-authors (2019) reviewed studies conducted by the Preparedness and Emergency Response Research Centers in the USA between 2008 and 2015 that addressed criteria for measuring preparedness. They concluded that a clear standard was still lacking and that the research community would need guidance in developing measures. Haeberer and co-authors (2020) evaluated the characteristics and utility of existing preparedness assessment instruments. They found 12 tools, 7 of them developed by international authorities or organisations and a further 5 developed by countries (1 from England, 1 from New Zealand and 3 from the USA). In their study, Haeberer and co-authors (2020) identified a lack of validity and user-friendliness. Thus, the literature shows that it remains critical to establish commonly agreed and validated methods of evaluation that help to define preparedness, identify potential for improvement and set benchmarks for comparing future efforts (Henstra 2010, Nelson, Lurie & Wasserman 2010, Davis et al. 2013, Wong et al. 2017).

Due to the relative rarity of disasters, it is unclear whether emergency plans and procedures are appropriate, whether equipment is functional and whether emergency personnel are adequately trained and able to undertake their duties (Shelton et al. 2013, Abir et al. 2017, Obaid et al. 2017, Qari et al. 2019). At the same time, a false sense of security due to unevaluated disaster preparedness strategies could lead to greater consequences from disasters (Gebbie et al. 2006). Ignorance about the status and quality of disaster preparedness impedes necessary precautionary measures and can cost lives. Moreover, the lack of proper evaluation poses a risk that mistakes of the past are not analysed, adaptations in procedures are not made and mistakes might be repeated (Abir et al. 2017). As Wong and co-authors (2017) stressed, there is ‘a moral imperative’ to improve methods of assessing preparedness and raise levels of preparedness to diminish preventable.

The Sendai Framework for Disaster Risk Reduction 2015-2030 underlines the social responsibility of academia and research entities to develop tools for practical application to help lessen the consequences of disasters (UNISDR 2015; Reifels et al. 2018). In addition, the Sendai Framework highlighted the important role of local governments in disaster risk reduction. Their understanding of local circumstances and the affected communities gives them valuable insights and the best chance of implementing measures (Beccari 2020). This scoping study offers emergency and disaster planners as well as researchers an overview of existing concepts and tools in the literature for preparedness evaluation at the government level. For planners, thorough evaluation of preparedness contributes to improved outcomes for people and reduced deaths, reduces costs for response and recovery and helps with future investment decisions (FEMA 2013). Evaluation can serve as a performance record as well as provide a basis for argument in negotiations for further (financial) resources. For researchers, this scoping review helps to compile evaluation concepts and identify conceptual gaps.

This study used a scoping-review approach as this method allowed an examination of a wide range of literature to identify key concepts and recognise gaps in the current knowledge (Arksey & O’Malley 2005). Scoping reviews generally aim to map existing literature regardless of the study design reported and without any critical appraisal of the quality of the studies (Peters et al. 2015).

For the review process, the 5-stage framework for conducting scoping reviews presented by Arksey and O’Malley (2005) was followed. The research was guided by a broad question: ‘What is known in scientific literature about the evaluation of disaster preparedness on the governmental level?’ Other questions were: ‘Which tools or concepts are available for evaluating disaster preparedness?’ and ‘Have these tools been tested in the field or used in disaster management?’ A scoping review method, which does not exclude any particular methods or assess study quality, was chosen because it provides as broad an overview of the existing tools and concepts as possible. The term ‘concept’ here means theoretical work that describes what preparedness evaluation should look like and what it encompasses, whereas ‘tools’ refers to actual ready-to-use instruments.

The search was conducted in 5 academic databases (Scopus, MEDLINE, APA PsycInfo, Business Source Premier and SocINDEX) covering public health, disaster management and social sciences. Searches were conducted in December 2018 with an update conducted in May 2021. The databases were selected as they are multi-disciplinary and encompass a wide range of research fields. In an initial step to gain an understanding of the material and terminology, various quick-scan searches were conducted in the databases as well as academic journals addressing disaster management and public health preparedness. This was followed by searches within the fields of ‘Title’, ‘Abstract’ and ‘Keywords’ as adapted to the specific requirements of each database using the following search terms: ‘disaster preparedness’ OR ‘emergency preparedness’ OR ‘crisis preparedness’ AND ‘assess*’ OR ‘evaluat*’ OR ‘measur*’ OR ‘indicat*’ AND NOT ‘hospital’.
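The search string as printed leaves operator precedence implicit. The following minimal sketch shows one way the grouping could be made explicit before adapting it to each database. The three-block grouping, the function name `build_query` and the rule of quoting only multi-word phrases are assumptions made for illustration; field codes and wildcard syntax differ between databases, so this is not the exact string submitted to any of the 5 databases.

```python
def build_query(topic_terms, evaluation_terms, excluded_terms):
    """Combine three term groups into one explicitly parenthesised Boolean string."""

    def any_of(terms):
        # Quote multi-word phrases only; single words keep their wildcard (*).
        parts = [f'"{t}"' if " " in t else t for t in terms]
        return "(" + " OR ".join(parts) + ")"

    query = f"{any_of(topic_terms)} AND {any_of(evaluation_terms)}"
    if excluded_terms:
        query += f" AND NOT {any_of(excluded_terms)}"
    return query


# Search terms taken from the text; the grouping is an assumption.
TOPIC = ["disaster preparedness", "emergency preparedness", "crisis preparedness"]
EVALUATION = ["assess*", "evaluat*", "measur*", "indicat*"]
EXCLUDED = ["hospital"]

QUERY = build_query(TOPIC, EVALUATION, EXCLUDED)
print(QUERY)
```

Keeping the term groups as separate lists makes it easier to document which block was changed when a database requires different syntax.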

The terms ‘disaster’, ‘crisis’ and ‘emergency’ are often used synonymously in the literature (Gillespie & Streeter 1987, Sutton & Tierney 2006, Hemond & Benoit 2012, Staupe-Delgado & Kruke 2018, Monte et al. 2020), thus these terms were used in all the searches. Additionally, a search of Google Scholar including the first 100 records was conducted. The reference lists of the examined full papers were searched manually to identify additional, relevant published works not retrieved via the database search. The literature sample was restricted to articles published between 1999 and 2021 in either English or German. Some relevant articles may have been excluded from this review due to these selection factors. All citations were imported into Endnote and duplicates were removed.

At the first stage of screening, the title and abstract of each published work were reviewed against eligibility criteria. Reasons for exclusion were:

Only papers that were clearly irrelevant for the study’s purpose were removed at the stage of screening titles and abstracts. The papers determined eligible for full-text review were checked by 2 researchers independently. The research team met throughout the screening process to discuss uncertainties regarding the inclusion and exclusion of works from the sample.

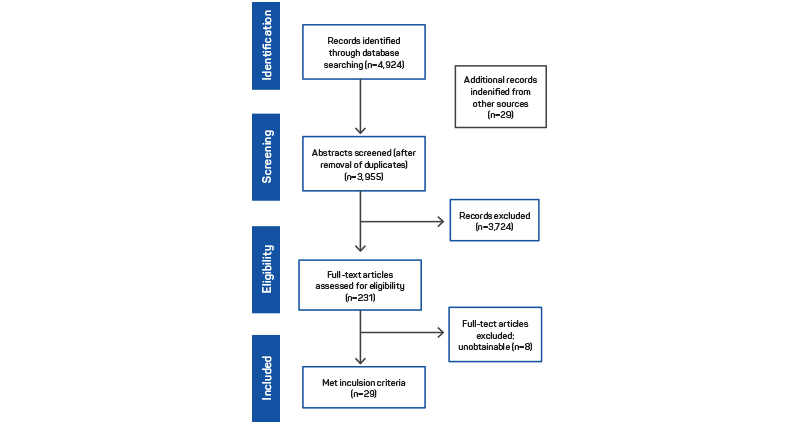

The database search returned 4,924 references. Removal of duplicates led to a total of 3,955. Of these, 29 were added from the Google Scholar search and from a snowballing analysis of the reference lists of the included works. Screening abstracts led to the exclusion of 3,724 records. Of the remaining 271 full-text works that were assessed for eligibility, 29 met all inclusion criteria for analysis, although 8 works were not available in full text. The search methodology is illustrated in Figure 1 and the analysis of the 29 integrated studies is detailed in Table 1.

The geographic distribution of the sample shows that the majority (n=15) of articles focused on preparedness evaluation in the USA. Two studies were from the Philippines and one each dealt with preparedness evaluation in European countries, Mexico, Canada, Indonesia, China, Saudi Arabia and Brazil. Two studies conducted case studies in Italy and France as well as Chile and Ecuador. Three conceptual works that addressed theoretical frameworks instead of instruments (Henstra 2010, Diirr & Borges 2013, Alexander 2015) did not specify a country in their descriptions.

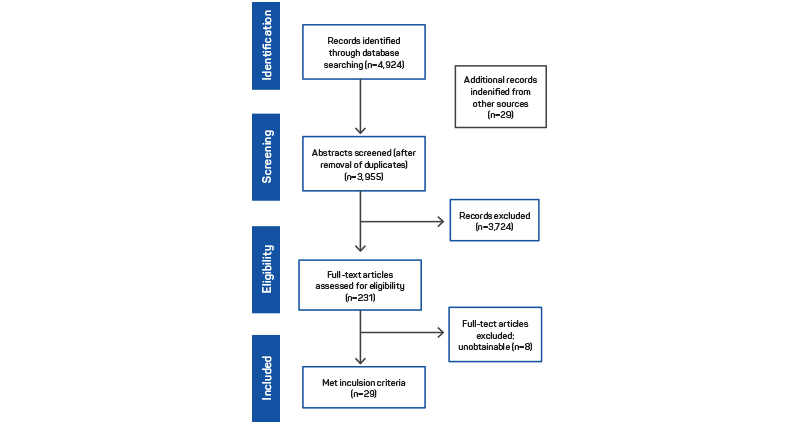

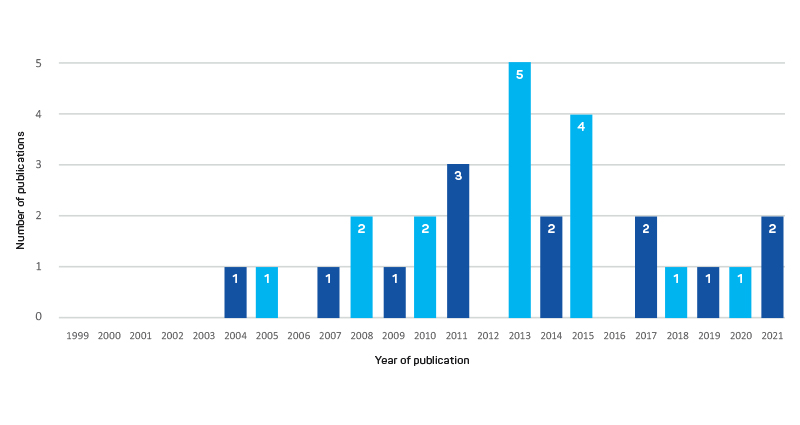

Figure 2 graphs the number of papers in the sample by year of publication.

The largest group of studies (n=14) took an all-hazards approach and 2 studies chose natural hazards as the research scope. One study each addressed radiation, bioterrorism, meteorological disasters and flood. One study addressed flood, typhoon and earthquake. Eight studies did not specify a hazard type.

Fourteen studies chose the local authority level for evaluation. Seven studies were at a state level and 2 were at the regional level. Three studies covered more than one level. Three studies did not specify a level of analysis.

In 23 works, the studies followed a quantitative approach and 12 included case studies based on the use of their assessment tools. Five studies were conceptual and offered theoretical frameworks, which could be used as a basis for setting up evaluation of disaster preparedness. One study used a qualitative approach by applying document review in combination with in-depth interviews.

An analysis of the topics in the studies revealed a wide variety of categories and categorisation schemes. For the following description, terms that were most commonly used within the studies as keywords were chosen. For reasons of clarity and comparability, only the main categories of the instruments were analysed and subcategories were not part of the review.

Some categories emerged in the sample only once or twice, either because the instruments were designed to analyse a particular topic or because the analyses were so superficial and generic that the categories were mentioned only once. Categories that occurred fewer than 3 times in the studies are not listed. Agboola and co-authors (2015) used 160 general tasks in their measurement tool; however, they did not describe the topics covered.

| Category | n | Authors |
| --- | --- | --- |
| Communication and information dissemination | 14 | (Somers 2007; Jones et al. 2008; Shoemaker et al. 2011; Shelton et al. 2013; Davis et al. 2013; Dalnoki-Veress, McKallagat & Klebesadal 2014; Djalali et al. 2014; Alexander 2015; Schoch-Spana, Selck & Goldberg 2015; Connelly, Lambert & Thekdi 2016; Murthy et al. 2017; Juanzon & Oreta 2018; Amin et al. 2019; Khan et al. 2019) |
| Plans and protocols (some including testing and adaptation of plans**) | 14 | (Mann, MacKenzie & Anderson 2004; Alexander 2005, 2015; Somers 2007; Simpson 2008; Henstra 2010; Watkins et al. 2011; Davis et al. 2013; Dalnoki-Veress, McKallagat & Klebesadal 2014; Connelly, Lambert & Thekdi 2016; Juanzon & Oreta 2018; Khan et al. 2019; Dariagan, Atando & Asis 2021; Greiving et al. 2021) |
| Staff/personnel/workforce (including volunteers*) | 11 | (Somers 2007; Jones et al. 2008; Porse 2009; Henstra 2010; Watkins et al. 2011; Davis et al. 2013; Dalnoki-Veress, McKallagat & Klebesadal 2014; Schoch-Spana, Selck & Goldberg 2015; Juanzon & Oreta 2018; Amin et al. 2019; Khan et al. 2019) |
| Training and exercises | 9 | (Mann, MacKenzie & Anderson 2004; Henstra 2010; Davis et al. 2013; Dalnoki-Veress, McKallagat & Klebesadal 2014; Djalali et al. 2014; Alexander 2015; Juanzon & Oreta 2018; Amin et al. 2019; Khan et al. 2019) |
| Legal and policy determinants | 9 | (Alexander 2005; Henstra 2010; Shoemaker et al. 2011; Davis et al. 2013; Potter et al. 2013; Djalali et al. 2014; Khan et al. 2019; Handayani et al. 2020; Dariagan, Atando & Asis 2021) |
| Cooperation and mutual aid agreements | 8 | (Henstra 2010; Watkins et al. 2011; Dalnoki-Veress, McKallagat & Klebesadal 2014; Schoch-Spana, Selck & Goldberg 2015; Connelly, Lambert & Thekdi 2016; Amin et al. 2019; Khan et al. 2019; Handayani et al. 2020) |
| Supplies and equipment | 8 | (Alexander 2005, 2015; Jones et al. 2008; Dalnoki-Veress, McKallagat & Klebesadal 2014; Djalali et al. 2014; Juanzon & Oreta 2018; Khan et al. 2019; Dariagan, Atando & Asis 2021) |
| Risk assessment | 7 | (Alexander 2005; Henstra 2010; Dalnoki-Veress, McKallagat & Klebesadal 2014; Murthy et al. 2017; Amin et al. 2019; Khan et al. 2019; Handayani et al. 2020) |
| Financial resources | 6 | (Potter et al. 2013; Dalnoki-Veress, McKallagat & Klebesadal 2014; Connelly, Lambert & Thekdi 2016; Juanzon & Oreta 2018; Khan et al. 2019; Handayani et al. 2020) |
| Evacuation and shelter | 6 | (Jones et al. 2008; Simpson 2008; Alexander 2015; Connelly, Lambert & Thekdi 2016; Greiving et al. 2021) |
| Early warning | 6 | (Simpson 2008; Djalali et al. 2014; Alexander 2015; Juanzon & Oreta 2018; Khan et al. 2019; Greiving et al. 2021) |
| Post-disaster recovery | 5 | (Alexander 2005, 2015; Somers 2007; Cao, Xiao & Zhao 2011; Handayani et al. 2020) |
| Community engagement | 4 | (Simpson 2008; Murthy et al. 2017; Juanzon & Oreta 2018; Khan et al. 2019) |

* (Davis et al. 2013, Schoch-Spana, Selck & Goldberg 2015)

** (Alexander 2005, Henstra 2010, Connelly, Lambert & Thekdi 2016)

The majority of studies (n=16) developed a questionnaire or checklist to evaluate preparedness. Some instruments also weighted the individual indicators and offered a total preparedness score. The scope and number of items in the questionnaires and checklists varied widely.

Four studies provided theoretical concepts about how to measure preparedness. Alexander (2005) set up 18 criteria to formulate a standard for assessing preparedness. He suggested using them to evaluate existing plans or as guidelines when developing new ones. Diirr & Borges (2013) offered a concept for workshops in which emergency plans are evaluated. Potter and co-authors (2013) presented a framework of the needs and challenges of a preparedness evaluation tool. Khan and co-authors (2019) set up 67 evaluation indicators for local public health agencies, based on an extensive literature review and a 3-round Delphi process in which 33 experts participated.

Six instruments using metrics appeared in works in the sample. Cao, Xiao & Zhao (2011) used entropy-weighting to improve the TOPSIS method. A measurement tool based on the Analytical Hierarchy Process technique was developed in each of 3 studies by Manca & Brambilla (2011), Dalnoki-Veress, McKallagat & Klebesadal (2014) and Handayani and co-authors (2020). Connelly, Lambert & Thekdi (2016) used multiple criteria and scenario analysis in their study. Porse (2009) used statistical analysis to identify significant correlations among the preparedness indicators in health districts and their demography, geography and critical infrastructure.
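To illustrate what an entropy-weighted TOPSIS calculation involves, the following is a generic textbook-style sketch and not the implementation of Cao, Xiao & Zhao (2011). It assumes that all indicators are positive benefit criteria (higher is better) and that at least one indicator varies across the units compared. The function names and the matrix values are hypothetical.

```python
import math


def entropy_weights(matrix):
    """Derive indicator weights from how much each column varies across units."""
    m, n = len(matrix), len(matrix[0])
    diversities = []
    for j in range(n):
        column = [row[j] for row in matrix]
        total = sum(column)
        shares = [x / total for x in column]
        # Shannon entropy scaled to [0, 1]; terms with p == 0 contribute nothing.
        entropy = -sum(p * math.log(p) for p in shares if p > 0) / math.log(m)
        diversities.append(1.0 - entropy)
    norm = sum(diversities)
    return [d / norm for d in diversities]


def topsis(matrix, weights):
    """Return the closeness of each unit to the ideal solution (0 = worst, 1 = best)."""
    n = len(weights)
    lengths = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    weighted = [[weights[j] * row[j] / lengths[j] for j in range(n)] for row in matrix]
    best = [max(row[j] for row in weighted) for j in range(n)]
    worst = [min(row[j] for row in weighted) for j in range(n)]
    scores = []
    for row in weighted:
        d_best = math.dist(row, best)
        d_worst = math.dist(row, worst)
        scores.append(d_worst / (d_best + d_worst))
    return scores


# Hypothetical data: 3 regions (rows) scored on 3 preparedness indicators (columns).
MATRIX = [
    [0.9, 0.8, 0.7],
    [0.5, 0.6, 0.9],
    [0.2, 0.3, 0.4],
]
WEIGHTS = entropy_weights(MATRIX)
SCORES = topsis(MATRIX, WEIGHTS)
for region, score in zip("ABC", SCORES):
    print(region, round(score, 3))
```

In this sketch, indicators that differ more strongly between regions receive larger weights, so the ranking is driven by the indicators that actually discriminate between the units.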

Three studies used other approaches. Nachtmann & Pohl (2013) developed a scorecard-based evaluation supported by a software application. Amin and co-authors (2019) developed a fuzzy-expert-system-based framework with a corresponding software tool. Greiving and co-authors (2021) developed a guiding framework for performing preparedness evaluation through a qualitative approach using policy documents and in-depth expert interviews.

For 5 studies, literature reviews were conducted to form a knowledge base to develop the instrument. In 6 other works, expert opinions and the experiential knowledge of the authors were stated as a basis for the instruments developed. Eleven instruments were based on existing models and techniques. A literature review in combination with expert consultation was used for 7 studies.

Twenty-two studies included some sort of testing of the developed instruments.

Eleven studies included case studies. Alexander (2015) evaluated a civil protection program in a Mexican town. Nachtmann & Pohl (2013) evaluated 3 country-level emergency operations plans using their method. Cao, Xiao & Zhao (2011) calculated the level of meteorological emergency management capability of 31 provinces in China. Manca and Brambilla (2011) conducted a case study of an international road tunnel accident. Connelly, Lambert and Thekdi (2016) applied their method to the city of Rio de Janeiro in Brazil and possible threats around the FIFA World Cup and Olympic Games held there. Juanzon and Oreta (2018) used their tool to assess the preparedness of the City of Santa Rosa. Simpson (2008) applied his methodology in 2 communities. Porse (2009) performed a statistical analysis of data collected in 35 health districts in Virginia, USA, to identify significant correlations among preparedness factor categories. Amin and co-authors (2019) analysed flood management in Saudi Arabia. Dariagan, Atando and Asis (2021) assessed the preparedness for natural hazards of 92 profiled municipalities in central Philippines. Greiving and co-authors (2021) conducted case studies by analysing policy documents and conducting in-depth interviews with experts to evaluate the preparedness of Chile and Ecuador.

Eleven studies developed questionnaires, which were sent to (public) health agencies and departments. The sample size of respondents in the studies varied widely. The remaining 7 studies did not provide information about whether the instruments were tested.

Nine studies reported obtaining some kind of feedback from experts or stakeholders. The feedback methods described were expert interviews (Agboola et al. 2015), expert interviews plus a questionnaire (Amin et al. 2019), informal discussions (Jones et al. 2008, Davis et al. 2013, Diirr & Borges 2013, Shelton et al. 2013), consultation at key milestones with professionals who were likely to use the tool (Khan et al. 2019), pilot testing and incorporating feedback into the final version (Watkins et al. 2011) and meetings and validation sessions (Manca & Brambilla 2011). Twenty studies did not state whether feedback from experts or stakeholders was obtained.

This review identified a wide variety of tools for government disaster preparedness evaluation in the literature. However, there is no clear or standardised approach and no consensus about what preparedness encompasses and what elements need to be present in a preparedness evaluation tool. The research is far from the goal of a simple and valid tool that is ready for use by emergency and disaster managers. The lack of dissemination in practice of most of the tools identified in the review suggests that there has been little to no involvement of disaster managers in the development process.

This study revealed an array of concepts and tools to measure and evaluate disaster preparedness at the government level. The wide range of assessment categories and topics covered demonstrates a lack of consistent terminology used in the methods sections, as noted by Wong and co-authors (2017). Many of the works in the sample focused on narrow contexts or special subject areas (e.g. legal aspects, logistics or emergency plans). Concepts for evaluating preparedness and all its components remain scarce, probably due to the great complexity and consequent scope that such tools would require. Whether it is possible to develop a single one-size-fits-all tool is questionable. Cox & Hamlen (2015) argue for several individual indices as this might give more meaningful insights than one aggregated index, while also offering flexibility. A major challenge in developing a comprehensive instrument is balancing generalisability and flexibility. According to Alexander (2015), local circumstances including ‘different legal frameworks, administrative cultures, wealth levels, local hazards, risk contexts and other variations’ have to be considered when establishing evaluation criteria (Alexander 2015, p.266, Das 2018). Therefore, developing a modular system consisting of fixed, must-have criteria as well as optional criteria is recommended. That approach would provide minimum standards and comparability as well as support individualisation by adding variables depending on the circumstances of the system to be evaluated. At the same time, a degree of simplicity is necessary in order to ensure an instrument’s widespread use.
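The modular idea of fixed must-have criteria plus optional criteria can be sketched as a simple data structure. This is only an illustration under stated assumptions: the class `Criterion`, the function `evaluate` and the choice of which criteria are mandatory are hypothetical, with criterion names borrowed from the categories found in the sample.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    mandatory: bool  # True = must-have (minimum standard), False = optional module


def evaluate(criteria, fulfilled):
    """Check must-have criteria separately from optional, context-specific ones."""
    missing_core = [c.name for c in criteria if c.mandatory and c.name not in fulfilled]
    optional_met = [c.name for c in criteria if not c.mandatory and c.name in fulfilled]
    return {
        "meets_minimum_standard": not missing_core,
        "missing_core": missing_core,
        "optional_met": optional_met,
    }


# Hypothetical configuration; which criteria are must-have is an assumption.
CRITERIA = [
    Criterion("plans and protocols", mandatory=True),
    Criterion("training and exercises", mandatory=True),
    Criterion("early warning", mandatory=False),
]
RESULT = evaluate(CRITERIA, fulfilled={"plans and protocols", "early warning"})
print(RESULT)
```

Reporting the must-have criteria separately keeps results comparable between systems, while the optional list can be extended for local hazards or legal frameworks without changing the core.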

Most of the included studies were conducted in the USA, which raises the issue of generalisability. As disaster preparedness is a topic of relevance to any community or state, an overview of existing concepts and tools, regardless of their geographic background, is valuable. When adapted to the socio-cultural and legal circumstances, a preparedness evaluation concept from one country can help improve the preparedness of another system.

Many concepts offer numerical scores for sub-areas as well as overall scores to support comparability of instruments, reveal potential for improvement and help users to assess disaster preparedness. However, the question arises whether one or a few numbers can represent the whole construct of preparedness. It is important to consider whether all factors should be considered equally or whether a weighting of components in the evaluation is necessary (Davis et al. 2013).
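The effect of weighting on an overall score can be shown with a small worked example. The sketch below assumes sub-area scores on a 0 to 1 scale and computes a weighted mean; the function name `composite_score`, the sub-areas, the scores and the weights are all hypothetical and are not taken from any instrument in the sample.

```python
def composite_score(sub_scores, weights=None):
    """Weighted mean of sub-area scores; equal weights are used if none are given."""
    if weights is None:
        weights = {name: 1.0 for name in sub_scores}
    total_weight = sum(weights[name] for name in sub_scores)
    weighted_sum = sum(sub_scores[name] * weights[name] for name in sub_scores)
    return weighted_sum / total_weight


# Hypothetical sub-area scores for one authority.
SUB_SCORES = {"plans": 0.9, "training": 0.4, "communication": 0.6}
# Hypothetical weighting that treats training as three times as important.
WEIGHTS = {"plans": 1.0, "training": 3.0, "communication": 1.0}

EQUAL = composite_score(SUB_SCORES)
WEIGHTED = composite_score(SUB_SCORES, WEIGHTS)
print(round(EQUAL, 3), round(WEIGHTED, 3))
```

The same sub-area scores produce different overall results depending on the weights, which is why the choice of weighting needs to be justified and reported alongside any total score.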

Another potential problem in evaluating preparedness with numeric scores is the risk of simplification. Having only a few scores and values may be helpful to form an overview of the status quo and they can be a useful instrument in discussions with policy makers or for acquiring financial resources. However, can the whole complex construct of preparedness be measured properly with only one or a few numbers? Important details could be neglected (Porse 2009, Davis et al. 2013, Khan et al. 2019). Using a mix of qualitative and quantitative measures addresses aspects of cultural factors, resource constraints, institutional structures or priorities of local stakeholders (Nelson, Lurie & Wasserman 2007; Cox & Hamlen 2015).

A considerable proportion of the studies described only partial or limited involvement of experts from within the field. Some studies used the knowledge and assessments of experts as a starting point for their concepts and some tested the instruments and asked disaster managers for their feedback. However, continuous cooperation and exchange appeared to be an exception, a problem unfortunately quite common in disaster risk reduction (Owen, Krusel & Bethune 2020). This is in line with the results of Davis and co-authors (2013) and Qari and co-authors (2019), who observed a lack of awareness and, as a result, limited dissemination of instruments for measuring preparedness. Yet all of these research efforts are worth nothing if not put into practice. As Hilliard, Scott-Halsell & Palakurthi (2011) stated, ‘It is not enough to talk about preparedness and keeping people, property and organisations safe. There has to be a bridge between the concepts and the real world’ (p.642).

While effort was undertaken to achieve a comprehensive overview of the scientific knowledge base about disaster preparedness evaluation, this scoping review might not have captured all existing concepts. The search algorithm was tested but other keywords might have returned additional or different results. Due to the lack of keywording, some relevant book chapters might not have been identified. Moreover, the selection of languages (English and German) as well as the chosen timeframe of publication (1999–2021) might have reduced the number of relevant results. Results from grey literature may have been missed as only the first 100 results from the web search were used. The classification of the results of the scoping review was carried out by 2 researchers independently; however, errors may have occurred during the selection process due to the subjective evaluation of eligibility. As the focus of the review was the scientific knowledge base, concepts of practice-oriented, humanitarian institutions and organisations were not included in this review. Studies dealing with infectious disease outbreaks or epidemics were not included as their course, duration and spread are very different from disasters triggered by natural hazards or human-made disasters like terror attacks.

Although disaster preparedness evaluation is important for practice and preparedness improvement, this study’s results indicate a lack of instruments that are ready to use. There is a broad variety of concepts and tools on offer; however, there is no standard or uniform approach. Research on evaluating preparedness has been conducted and the list of these works provides an overview of concepts. However, the goal of developing a valid as well as easy-to-use tool for measuring preparedness at the government level seems far from achieved. Many assessment tools lack dissemination and use in practice, which limits feedback from experts and practitioners. The variation in types of instruments used to measure preparedness and the diversity of questions and topics covered within the studied publications demonstrate a lack of consensus on what constitutes preparedness and how it should be measured. Any tool for evaluating preparedness needs to strike a balance between simplicity and flexibility in order to account for the different circumstances of communities as well as hazard types. Therefore, a modular evaluation system including must-have criteria as well as optional criteria is required.

Abir M, Bell SA, Puppala N, Awad O & Moore M 2017, Setting foundations for developing disaster response metrics, Disaster Medicine and Public Health Preparedness, vol. 11, no. 4, pp.505–509. doi:10.1017/dmp.2016.173

Agboola F, Bernard D, Savoia E & Biddinger PD 2015, Development of an online toolkit for measuring performance in health emergency response exercises, Prehospital and Disaster Medicine, vol. 30, no. 5, pp.503–508. doi:10.1017/S1049023X15005117

Alexander D 2005, Towards the development of a standard in emergency planning, Disaster Prevention and Management: An International Journal, vol. 14, no. 2, pp.158–175. doi:10.1108/09653560510595164

Alexander DE 2015, Evaluation of civil protection programmes, with a case study from Mexico, Disaster Prevention and Management, vol. 24, no. 2, pp.263–283. doi:10.1108/DPM-12-2014-0268

Amin S, Hijji M, Iqbal R, Harrop W & Chang V 2019, Fuzzy expert system-based framework for flood management in Saudi Arabia, Cluster Computing, vol. 22, pp.11723–740. doi:10.1007/s10586-017-1465-64

Arksey H & O’Malley L 2005, Scoping studies: towards a methodological framework, International Journal of Social Research Methodology, vol. 8, no. 1, pp.19–32. doi:10.1080/1364557032000119616

Asch SM, Stoto M, Mendes M, Valdez RB, Gallagher ME, Halverson P & Lurie N 2005, A review of instruments assessing public health preparedness, Public Health Reports, vol. 120, no. 5, pp.532–542.

Beccari B 2020, When do local governments reduce risk?: Knowledge gaps and a research agenda, Australian Journal of Emergency Management, vol. 35, no. 3, pp.20–24.

Belfroid E, Roßkamp D, Fraser G, Swaan C & Timen A 2020, Towards defining core principles of public health emergency preparedness: Scoping review and Delphi consultation among European Union country experts, BMC Public Health, vol. 20, no. 1. doi:10.1186/s12889-020-09307-y

Cao W, Xiao H & Zhao Q 2011, The comprehensive evaluation system for meteorological disasters emergency management capability based on the entropy-weighting TOPSIS method. In ‘Proceedings of International Conference on Information Systems for Crisis Response and Management’, pp.434–439. doi:10.1109/ISCRAM.2011.6184146

Connelly EB, Lambert JH & Thekdi SA 2016, Robust Investments in Humanitarian Logistics and Supply Chains for Disaster Resilience and Sustainable Communities, Natural Hazards Review, vol. 17, no. 1. doi:10.1061/(ASCE)NH.1527-6996.0000187

Cox RS & Hamlen M 2015, Community Disaster Resilience and the Rural Resilience Index, American Behavioral Scientist, vol. 59, no. 2, pp.220–237. doi:10.1177/0002764214550297

Dalnoki-Veress F, McKallagat C & Klebesadal A 2014, Local health department planning for a radiological emergency: An application of the AHP2 tool to emergency preparedness prioritization, Public Health Reports, vol. 129, pp.136–144. doi:10.1177/00333549141296S418

Dariagan JD, Atando RB & Asis JLB 2021, Disaster preparedness of local governments in Panay Island, Philippines, Natural Hazards, vol. 105, no. 2, pp.1923–1944. doi:10.1007/s11069-020-04383-0

Das R 2018, Disaster preparedness for better response: Logistics perspectives, International Journal of Disaster Risk Reduction, vol. 31, pp.153–159. doi:10.1016/j.ijdrr.2018.05.005

Davis MV, Mays GP, Bellamy J, Bevc CA & Marti C 2013, Improving public health preparedness capacity measurement: Development of the local health department preparedness capacities assessment survey, Disaster Medicine and Public Health Preparedness, vol. 7, no. 6, pp.578–584. doi:10.1017/dmp.2013.108

Diirr B & Borges MRS 2013, Applying software engineering testing techniques to evaluate emergency plans, in. Graduate Program in Informatics, pp.758–763.

Djalali A, Della Corte F, Foletti M, Ragazzoni L, Ripoll Gallardo A, Lupescu O, Arculeo C, von Arnim G, Friedl T, Ashkenazi M, Fischer P, Hreckovski B, Khorram-Manesh A, Komadina R, Lechner K, Patru C, Burkle FM Jr & Ingrassia PL 2014, Art of Disaster Preparedness in European Union: a Survey on the Health Systems, PLoS Currents, pp.1–17.

FEMA (Federal Emergency Management Agency) 2013, National Strategy Recommendations: Future Disaster Preparedness. At: www.preventionweb.net/publication/national-strategy-recommendations-future-disaster-preparedness.

Gebbie KM, Valas J, Merrill J & Morse S 2006, Role of exercises and drills in the evaluation of public health in emergency response, Prehospital and Disaster Medicine, vol. 21, no. 3, pp.173–182. doi:10.1017/S1049023X00003642

Gillespie D & Streeter C 1987, Conceptualizing and measuring disaster preparedness, International Journal of Mass Emergencies and Disasters, vol. 5, no. 2, pp.155–176.

Greiving S, Schödl L, Gaudry K-H, Miralles IKQ, Larraín BP, Fleischhauer M, Guerra MMJ & Tobar J 2021, Multi-risk assessment and management—a comparative study of the current state of affairs in Chile and Ecuador, Sustainability (Switzerland), vol. 13, no. 3, pp.1–23. doi:10.3390/su13031366

Haeberer M, Tsolova S, Riley P, Cano-Portero R, Rexroth U, Ciotti M & Fraser G 2020, Tools for Assessment of Country Preparedness for Public Health Emergencies: A Critical Review, Disaster Medicine and Public Health Preparedness. doi:10.1017/dmp.2020.13

Handayani NU, Sari DP, Ulkhaq MM, Nugroho AS & Hanifah A 2020, Identifying factors for assessing regional readiness level to manage natural disaster in emergency response periods. In AIP Conference Proceedings, vol. 2217, no. 1, pp.030037. doi:10.1063/5.0000904

Henstra D 2010, Evaluating Local Government Emergency Management Programs: What Framework Should Public Managers Adopt?, Public Administration Review, vol. 70, no. 2, pp.236–246.

Hilliard TW, Scott-Halsell S & Palakurthi R 2011, Core crisis preparedness measures implemented by meeting planners, Journal of Hospitality Marketing and Management, vol. 20, no. 6, pp.638–660. doi:10.1080/19368623.2011.536077

Jones M, O’Carroll P, Thompson J & D’Ambrosio L 2008, Assessing regional public health preparedness: A new tool for considering cross-border issues, Journal of Public Health Management and Practice, vol. 14, no. 5, pp.E15–E22. doi:10.1097/01.PHH.0000333891.06259.44

Juanzon JBP & Oreta AWC 2018, An assessment on the effective preparedness and disaster response: The case of Santa Rosa City, Laguna, Procedia Engineering, vol. 212, pp.929–936. doi:10.1016/j.proeng.2018.01.120

Khan Y, Brown AD, Gagliardi AR, O’Sullivan T, Lacarte S, Henry B & Schwartz B 2019, Are we prepared? The development of performance indicators for public health emergency preparedness using a modified Delphi approach, PLoS ONE, vol. 14, no. 12, pp.1–19. doi:10.1371/journal.pone.0226489

Manca D & Brambilla S 2011, A methodology based on the Analytic Hierarchy Process for the quantitative assessment of emergency preparedness and response in road tunnels, Transport Policy, vol. 18, no. 5, pp.657–664. doi:10.1016/j.tranpol.2010.12.003

Mann NC, MacKenzie E & Anderson C 2004, Public health preparedness for mass-casualty events: A 2002 state-by-state assessment, Prehospital and Disaster Medicine, vol. 19, no. 3, pp.245–255. doi:10.1017/S1049023X00001849

McConnell A & Drennan L 2006, Mission Impossible? Planning and Preparing for Crisis, Journal of Contingencies and Crisis Management, vol. 14, no. 2, pp.59–70. doi:10.1111/j.1468-5973.2006.00482.x

McEntire D & Myers A 2004, Preparing communities for disasters: issues and processes for government readiness, Disaster Prevention and Management, vol. 13, no. 2, pp.140–152. doi:10.1108/09653560410534289

Monte BEO, Goldenfum JA, Michel GP & de Albuquerque Cavalcanti JR 2020, Terminology of natural hazards and disasters: A review and the case of Brazil, International Journal of Disaster Risk Reduction, p.101970. doi:10.1016/j.ijdrr.2020.101970

Murthy BP, Molinari NAM, LeBlanc TT, Vagi SJ & Avchen RN 2017, Progress in Public Health Emergency Preparedness-United States, 2001-2016, American Journal of Public Health, vol. 107, no. S2, pp.S180–S185. doi:10.2105/AJPH.2017.304038

Nachtmann H & Pohl EA 2013, Transportation readiness assessment and valuation for emergency logistics, International Journal of Emergency Management, vol. 9, no. 1, pp.18–36. doi:10.1504/IJEM.2013.054099

Nelson C, Lurie N & Wasserman J 2007, Assessing public health emergency preparedness: Concepts, tools, and challenges, Annual Review of Public Health, pp.1–18. doi:10.1146/annurev.publhealth.28.021406.144054

Nelson C, Chan E, Chandra A, Sorensen P, Willis HH, Dulin S & Leuschner K 2010, Developing national standards for public health emergency preparedness with a limited evidence base, Disaster Medicine and Public Health Preparedness, vol. 4, no. 4, pp.285–290. doi:10.1001/dmp.2010.39

Obaid JM, Bailey G, Wheeler H, Meyers L, Medcalf SJ, Hansen KF, Sanger KK & Lowe JJ 2017, Utilization of Functional Exercises to Build Regional Emergency Preparedness among Rural Health Organizations in the US, Prehospital and Disaster Medicine, vol. 32, no. 2, pp.224–230. doi:10.1017/S1049023X16001527

Owen C, Krusel N & Bethune L 2020, Implementing research to support disaster risk reduction, Australian Journal of Emergency Management, vol. 35, no. 3, pp.54–61.

Peters MDJ, Godfrey CM, McInerney P, Soares CB, Khalil H & Parker D 2015, Methodology for JBI Scoping Reviews, Joanna Briggs Institute, vol. 53, no. 9, pp.0–24. doi:10.1017/CBO9781107415324.004

Porse CC 2009, Biodefense and public health preparedness in Virginia, Biosecurity and Bioterrorism, vol. 7, no. 1, pp.73–84. doi:10.1089/bsp.2008.0041

Potter MA, Houck OC, Miner K & Shoaf K 2013, Data for preparedness metrics: Legal, economic, and operational, Journal of Public Health Management and Practice, vol. 19, no. 5, pp.S22–S27. doi:10.1097/PHH.0b013e318295e8ef

Qari SH, Yusuf HR, Groseclose SL, Leinhos MR & Carbone EG 2019, Public Health Emergency Preparedness System Evaluation Criteria and Performance Metrics: A Review of Contributions of the CDC-Funded Preparedness and Emergency Response Research Centers, Disaster Medicine and Public Health Preparedness, vol. 13, no. 3, pp.626–638. doi:10.1017/dmp.2018.110

Reifels L, Arbon P, Capon A, Handmer J, Humphrey A, Murray V & Spencer C 2018, Health and disaster risk reduction regarding the Sendai Framework, Australian Journal of Emergency Management, vol. 33, no. 1, pp.23–24.

Savoia E, Lin L, Bernard D, Klein N, James LP & Guicciardi S 2017, Public Health System Research in Public Health Emergency Preparedness in the United States (2009–2015): Actionable Knowledge Base, American Journal of Public Health, vol. 107, no. S2, pp.e1–e6. doi:10.2105/AJPH.2017.304051

Schoch-Spana M, Selck FW & Goldberg LA 2015, A national survey on health department capacity for community engagement in emergency preparedness, Journal of Public Health Management and Practice, vol. 21, no. 2, pp.196–207. doi:10.1097/PHH.0000000000000110

Shelton SR, Nelson CD, McLees AW, Mumford K & Thomas C 2013, Building performance-based accountability with limited empirical evidence: performance measurement for public health preparedness, Disaster Medicine and Public Health Preparedness, vol. 7, no. 4, pp.373–379. doi:10.1017/dmp.2013.20

Shoemaker Z, Eaton L, Petit F, Fisher R & Collins M 2011, Assessing community and region emergency-services capabilities, WIT Transactions on The Built Environment, vol. 119, pp.99–110. doi:10.2495/DMAN110101

Simpson D 2008, Disaster preparedness measures: a test case development and application, Disaster Prevention and Management, vol. 17, no. 5, pp.645–661. doi:10.1108/09653560810918658

Somers S 2007, Survey and Assessment of Planning for Operational Continuity in Public Works, Public Works Management and Policy, vol. 12, no. 2, pp.451–465. doi:10.1177/1087724X07308772

Staupe-Delgado R & Kruke B 2017, Developing a typology of crisis preparedness, Safety and Reliability – Theory and Applications, (November), pp.366–366. doi:10.1201/9781315210469-322

Staupe-Delgado R & Kruke BI 2018, Preparedness: Unpacking and clarifying the concept, Journal of Contingencies and Crisis Management, vol. 26, no. 2, pp.212–224. doi:10.1111/1468-5973.12175

Sutton J & Tierney K 2006, Disaster preparedness: concepts, guidance, and research, report, Fritz Institute Assessing Disaster Preparedness Conference, Sebastopol, CA, November, pp.3–4.

UNISDR 2015, Sendai Framework for Disaster Risk Reduction 2015-2030. Geneva. At: www.undrr.org/publication/sendai-framework-disaster-risk-reduction-2015-2030.

UNISDR 2016, Report of the open-ended intergovernmental expert working group on indicators and terminology relating to disaster risk reduction. Geneva. At: www.preventionweb.net/publication/report-open-ended-intergovernmental-expert-working-group-indicators-and-terminology.

Watkins SM, Perrotta DM, Stanbury M, Heumann M, Anderson H, Simms E & Huang M 2011, State-level emergency preparedness and response capabilities, Disaster Medicine and Public Health Preparedness, vol. 5, Suppl. 1, pp.S134–S142. doi:10.1001/dmp.2011.26

Wong DF, Spencer C, Boyd L, Burkle FM & Archer F 2017, Disaster metrics: a comprehensive framework for disaster evaluation typologies, Prehospital and Disaster Medicine, vol. 32, no. 5, pp.501–514. doi:10.1017/S1049023X17006471

About the authors

Dr Nina Lorenzoni works as a senior scientist at UMIT TIROL. Her main research areas are organisational and individual learning and disaster management. She focuses on science communication and its impact on policymaking.

Dr Stephanie Kainrath is an associate researcher at UMIT TIROL. Her research is on high-fidelity simulations.

Dr Maria Unterholzner is a medical resident at the district hospital in Reutte, Austria.

Professor Harald Stummer is Professor for Management in Healthcare at UMIT TIROL. His research focuses on behaviour in the healthcare sector and health behaviour of communities and groups.